Modern search engines let us find the answer to practically any question in the ever-growing ocean of internet data within a couple of seconds. How is it possible? What stands behind such a lightning-fast search process? In this article, we will talk about artificial intelligence search technologies that quickly help satisfy the user’s informational needs and provide the most relevant recommendations.

AI Search Techniques in Detail

Content search is a sequence of steps for analyzing all the available data sources and extracting the most relevant results for a given query. Digital search systems are constantly evolving to provide users with results that are as accurate as possible. The ideal search system presents only relevant information that fully matches the query. For this reason, the leading engines started to implement artificial intelligence algorithms in their search practices.

Artificial intelligence is the technology that endows computers with cognitive abilities and, simply put, teaches them to behave like humans. The AI industry is definitely on the rise now:

- In 2018, research by Adobe demonstrated that 15% of enterprises had already adopted AI components in their business, while 31% of companies were going to implement them within the next 12 months.

- As for global spending on artificial intelligence, the International Data Corporation forecast that it would reach $57.6 billion by 2021, with a CAGR of 50.1%.

- Stanford University found that the number of active startups related to AI-based innovations has increased by 1,400% since 2000.

Machine intelligence is applied in various fields, from manufacturing and medicine to education and entertainment. However, its impact is probably greatest in search technology.

The Evolution of Search Systems

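The World Wide Web Wanderer, created in 1993, is often considered one of the first web robots. Its original purpose was to measure the size of the web, and the index it produced, Wandex, is commonly cited as an early search tool. The first search systems simply looked for web pages containing the keywords from the user’s query.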

As the number of documents on the internet grew, it became necessary to rank relevant pages. For page ranking, search robots started to take into account not only the keywords from the query but also the frequency of these words and their importance in the context of the document.

This led to the statistical measure TF-IDF, the product of two components:

- TF (term frequency): the ratio of the number of occurrences of a word to the total number of words in the document. It assesses the importance of the word within a single document.

- IDF (inverse document frequency): the inverse of the share of documents in a specific collection that contain the word, usually taken on a logarithmic scale. This component reduces the weight of widely used words.
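The two components can be sketched in a few lines of Python. This is a minimal illustration, assuming whitespace tokenization and the common logarithmic form of IDF; the toy corpus and the function names are hypothetical, and real search engines use more elaborate variants.

```python
import math
from collections import Counter


def tf(term, doc_tokens):
    """Term frequency: occurrences of the term divided by the document length."""
    if not doc_tokens:
        return 0.0
    return Counter(doc_tokens)[term] / len(doc_tokens)


def idf(term, corpus):
    """Inverse document frequency: log(N / number of documents containing the term)."""
    containing = sum(1 for doc in corpus if term in doc)
    if containing == 0:
        return 0.0  # assumption: a term absent from the collection gets zero weight
    return math.log(len(corpus) / containing)


def tf_idf(term, doc_tokens, corpus):
    """TF-IDF weight of a term in one document of the collection."""
    return tf(term, doc_tokens) * idf(term, corpus)


# Hypothetical toy collection, tokenized by whitespace.
corpus = [
    "the cat sat on the mat".split(),
    "the dog chased the cat".split(),
    "the bird sang".split(),
]

for word in ("the", "cat", "mat"):
    print(word, round(tf_idf(word, corpus[0], corpus), 4))
```

In this toy collection, "the" occurs in every document, so its IDF and therefore its weight is zero, while the rarer "mat" gets a higher weight than "cat".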

The Google search engine appeared in 1998 with an innovative backlink-based ranking algorithm, PageRank. The essence of this algorithm is that the importance of a page is evaluated by the number of hyperlinks pointing to it and by the importance of the pages those links come from. Pages with many backlinks from important pages are pushed to the top of the ranking. Backlinks are still widely regarded as one of the strongest Google ranking factors.
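The idea behind backlink ranking can be illustrated with a simplified power-iteration sketch. It is not Google's production algorithm: the damping factor of 0.85, the fixed iteration count, the handling of pages without outgoing links, and the four-page toy web are all assumptions made for the example.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank. `links` maps each page to the pages it links to."""
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    pages = sorted(pages)
    n = len(pages)

    # Start with rank spread evenly across all pages.
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its rank on in equal shares to the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Assumption: a page with no outgoing links spreads its rank evenly.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical toy web: A, B and D all link to C; C links back to A.
web = {"A": ["C"], "B": ["C"], "C": ["A"], "D": ["C"]}
ranks = pagerank(web)

for page in sorted(ranks, key=ranks.get, reverse=True):
    print(page, round(ranks[page], 3))
```

Page C ranks highest because three pages link to it. Page A has only one backlink, but it comes from the important page C, so A still outranks B and D, which have no backlinks at all.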

In the early 2000s, search algorithms began to use machine learning. Initially, systems were trained on samples labeled by human assessors. Later on, gradient boosting came into use for regression and classification problems. The method builds a predictive model as an ensemble of decision trees, each one correcting the errors of the previous ones, which allows it to handle heterogeneous data. It is effective when thousands of users enter identical requests.
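A stripped-down sketch can show how gradient boosting combines many weak trees. It assumes a single numeric feature, squared-error loss and one-split trees (decision stumps); the data, the learning rate and all function names are hypothetical, and production ranking systems rely on far richer features and dedicated libraries.

```python
def fit_stump(xs, residuals):
    """Fit a one-split regression tree (stump) to the residuals.

    Assumes xs contains at least two distinct values.
    """
    best = None
    for threshold in sorted(set(xs)):
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        if not left or not right:
            continue
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def fit_gradient_boosting(xs, ys, n_trees=20, learning_rate=0.5):
    """Each new stump is fitted to the errors left by the current ensemble."""
    base = sum(ys) / len(ys)  # initial prediction: the mean target
    predictions = [base] * len(ys)
    stumps = []
    for _ in range(n_trees):
        # For squared-error loss, the negative gradient is the residual.
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * predict_stump(stump, x)
                       for p, x in zip(predictions, xs)]
    return base, stumps, learning_rate


def predict(model, x):
    base, stumps, learning_rate = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


# Hypothetical data: one feature value per document and an assessor's relevance score.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 2.9, 5.2, 5.0]
model = fit_gradient_boosting(xs, ys)
print([round(predict(model, x), 2) for x in xs])
```

The shrinkage step (`learning_rate`) keeps each stump's contribution small, which is why many trees are needed and why the ensemble as a whole, rather than any single tree, forms the final model.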

However, the search process was evolving toward unique, low-frequency, verbose queries. In 2013, Google created Word2Vec, a set of models for semantic analysis. It provided the basis for RankBrain, a new artificial intelligence search technology launched in 2015. This self-learning system can establish links between separate words, extract hidden semantic connections and understand the meaning of the text.

Today, search engine algorithms rely on neural networks and deep learning to find pages that match the query not only by keywords but also by meaning. The main advantage of neural networks over traditional algorithms is that they are trained rather than explicitly programmed. Technically, they are able to learn, i.e. to detect complex dependencies between the input data and the output, as well as to generalize, much as the human brain does by building connections between neurons.
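Matching by meaning is commonly done by representing words as numeric vectors, as Word2Vec does, and comparing those vectors. The sketch below uses cosine similarity on hand-made three-dimensional vectors; the vectors are hypothetical stand-ins, since real embeddings are learned from large text collections and have hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: close to 1.0 means similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_similar(word, embeddings):
    """Return the other word whose vector is closest to the given word's vector."""
    query = embeddings[word]
    scores = {other: cosine_similarity(query, vector)
              for other, vector in embeddings.items() if other != word}
    return max(scores, key=scores.get)


# Hypothetical hand-made vectors, not the output of a trained model.
embeddings = {
    "car": [0.9, 0.1, 0.0],
    "automobile": [0.85, 0.15, 0.05],
    "banana": [0.0, 0.2, 0.95],
}

print(most_similar("car", embeddings))
```

Although "car" and "automobile" share no characters that a keyword match could use, their vectors point in almost the same direction, so a query containing one can retrieve pages that mention the other.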

The core task of all AI search techniques is to improve the understanding of complex verbose queries and to provide the correct result even when the input information is incomplete or distorted.

Top Content Search Engines

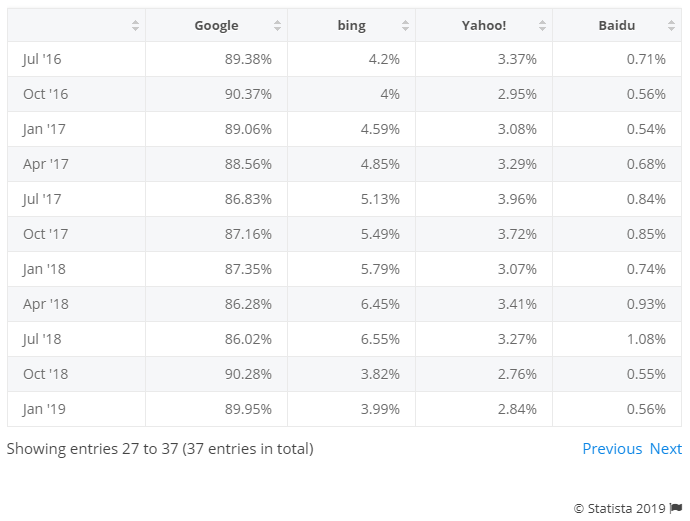

Google has been an undeniable leader in the market of search engines since its creation to this day. The position is proven by Statista’s data.

Worldwide desktop market share of leading search engines

Source: Statista

Perhaps surprisingly, several other popular search systems handle millions of queries every day:

- Bing: the default search system on Windows PCs, developed by Microsoft; it holds second place in the market.

- Yahoo: formerly the default search system for Firefox in the US; Yahoo’s search-related services are powered by Bing and Google.

- Baidu: the most popular search engine in China; it ranks fourth in the world.

- Yandex: the most popular search engine in Russia.

- DuckDuckGo: a system that prioritizes user privacy, doesn’t store IP addresses and is not loaded with ads.

Still, none of these platforms is able to measure up to Google.

AI-Based Recommender Systems

The analysis of search history and user activity on the internet serves as the foundation for personalized recommendations, which have become a powerful marketing tool for the eCommerce industry and online businesses.

Like AI search methods, recommendation engines are based on artificial intelligence technology, and they are gaining momentum. The difference lies in how they work: recommendation systems don’t use explicit queries but rather analyze user preferences to recommend goods or services that may be of interest. To foresee the needs of a certain customer, a recommender takes into account the following factors:

- Previously viewed pages

- Previous purchases

- User profile (age, gender, profession, hobbies)

- Similar profiles of other users and their interactions

- Geographical location

Thus, a recommendation engine is a filtering system that combats information overload and extracts to-the-point content tailored to each customer’s needs. Both online buyers and sellers benefit from AI recommenders:

- Buyers discover items that they might not have found on their own

- Sellers encourage upselling and cross-selling, increasing their revenues
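One of the factors listed above, similar profiles of other users and their interactions, is the basis of collaborative filtering. The sketch below is a minimal user-based version, assuming explicit ratings and cosine similarity between users; the users, items, ratings and function names are all hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two users' rating dictionaries."""
    shared = set(u) & set(v)
    if not shared:
        return 0.0
    dot = sum(u[item] * v[item] for item in shared)
    norm_u = math.sqrt(sum(r * r for r in u.values()))
    norm_v = math.sqrt(sum(r * r for r in v.values()))
    return dot / (norm_u * norm_v)


def recommend(target, ratings, top_n=2):
    """Suggest items the target user has not rated, weighted by similar users' ratings."""
    scores = {}
    for other, other_ratings in ratings.items():
        if other == target:
            continue
        similarity = cosine(ratings[target], other_ratings)
        if similarity <= 0:
            continue
        for item, rating in other_ratings.items():
            if item not in ratings[target]:
                scores[item] = scores.get(item, 0.0) + similarity * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


# Hypothetical purchase ratings on a 1-5 scale.
ratings = {
    "alice": {"laptop": 5, "mouse": 4},
    "bob": {"laptop": 5, "mouse": 5, "keyboard": 4},
    "carol": {"novel": 5, "bookmark": 4, "mouse": 1},
}

print(recommend("alice", ratings))
```

Bob's tastes are close to Alice's, so the keyboard he rated highly is suggested to her first; Carol's ratings count for much less because her profile overlaps with Alice's only slightly.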

Top Content Recommender Systems

Here are some well-known services that apply recommendation systems:

Video:

- Youtube.com

- Netflix.com

Audio:

- Music.yandex.ru

- Apple.com/lae/apple-music/

- Spotify.com

News:

- Zen.yandex.ru

- Pulse.mail.ru

Friends:

- Yandex.ru/aura/promo/

Top Artificial Intelligence Search Technologies for Better Customer Experience

Customer satisfaction is the pillar of excellence for numerous retail and service businesses. To attract and retain as many clients as possible, large and small companies implement AI solutions that can provide customers with the required information, help them complete tasks or give recommendations. Take a look at the top AI-powered search technologies used for interaction with clients.

Chatbots

Chatbots are pre-trained programs that can reply to a fixed set of questions, typically FAQs. There are three types of chatbots:

- Chit-chat: focuses on informal social chat

- Informational: finds information or directly answers user questions

- Task-oriented: helps users finish a specific task, such as booking or canceling a ticket

Chatbots are usually text-based and can also interact with visual and audio content. They don’t have advanced language processing skills, so they may fail to respond to statements they were not trained on. That’s why they can’t sustain a long conversation.
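The limitation described above is easy to see in a minimal FAQ bot. This sketch assumes simple word-overlap matching against a fixed set of questions; the questions, answers, threshold and function names are hypothetical, and real chatbots typically use trained intent classifiers instead.

```python
import re

# Hypothetical FAQ entries: known question -> canned answer.
FAQ = {
    "what are your opening hours": "We are open from 9 am to 6 pm, Monday to Friday.",
    "how can i cancel my ticket": "You can cancel a ticket from the My Bookings page.",
    "where is my order": "You can track your order from the Orders page.",
}

FALLBACK = "Sorry, I don't know how to help with that."


def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def reply(message, faq=FAQ, min_overlap=0.5):
    """Answer with the FAQ entry whose question shares the most words with the message."""
    words = tokenize(message)
    best_answer, best_score = None, 0.0
    for question, answer in faq.items():
        question_words = tokenize(question)
        score = len(words & question_words) / len(question_words)
        if score > best_score:
            best_answer, best_score = answer, score
    # Anything too far from the known questions gets the fallback reply.
    return best_answer if best_score >= min_overlap else FALLBACK


print(reply("How can I cancel my ticket?"))
print(reply("Tell me a joke about penguins"))
```

The first message matches a known question and gets its canned answer, while the second falls outside the known set and triggers the fallback reply.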

Virtual Assistants

Compared with chatbots, virtual assistants are more sophisticated platforms built on neural networks. Thanks to natural language processing (NLP) and natural language understanding (NLU), they can learn from the situation, keep long conversations and perform complicated tasks, e.g. comparing products.

Contextual Analysis

Online stores develop applications powered by complex AI-based algorithms that can predict user needs based on the purchase history, the time and the location.

For instance, an app automatically orders (or recommends that you order) sugar or flour after calculating that you will likely run out of these products today. What’s more, it can send you a notification to drop into a coffee bar or order a coffee when you approach its location, provided that you drink coffee regularly. This practice, called just-in-time sales, helps increase sales significantly and simplifies many routine tasks of ordinary users.
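The run-out prediction from the example above can be approximated with very simple arithmetic. The sketch below assumes that the average interval between past purchases is a reasonable estimate of when the product will run out; the purchase dates and function names are hypothetical, and a real application would likely use a trained model with more signals.

```python
from datetime import date, timedelta


def predict_next_purchase(purchase_dates):
    """Estimate the next purchase date as the last purchase plus the average interval."""
    if len(purchase_dates) < 2:
        return None  # not enough history to estimate an interval
    ordered = sorted(purchase_dates)
    gaps = [(later - earlier).days for earlier, later in zip(ordered, ordered[1:])]
    average_gap = sum(gaps) / len(gaps)
    return ordered[-1] + timedelta(days=round(average_gap))


def should_remind(purchase_dates, today):
    """True once the estimated run-out date has been reached."""
    predicted = predict_next_purchase(purchase_dates)
    return predicted is not None and today >= predicted


# Hypothetical purchase history for sugar: one purchase every two weeks.
sugar_purchases = [date(2024, 1, 1), date(2024, 1, 15), date(2024, 1, 29)]

print(predict_next_purchase(sugar_purchases))
print(should_remind(sugar_purchases, date(2024, 2, 12)))
```

With purchases spaced two weeks apart, the estimate lands two weeks after the last one, and the reminder fires only from that date onward.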

Frameworks for Developing AI, ML, DL Solutions

To successfully create and deploy projects with intelligent search and AI technologies, developers have to choose the appropriate frameworks. Each framework serves a certain purpose and has its own features and functions.

Microsoft Cognitive Toolkit (CNTK)

CNTK is an open-source set of tools for designing and developing neural networks of various types. It helps work with large amounts of data through deep learning and provides effective training of models for voice, image and handwriting recognition. CNTK can be used as a standalone machine learning tool or as a library in Python, C#, C++ and Java programs.

TensorFlow

This is one of the best open-source libraries for voice and image recognition and for text applications. The framework was developed by Google and is written in C++ and Python. It is well suited to complex projects, e.g. building multilayer neural networks.

PyTorch

Created by Facebook, this tool is mainly used to train models quickly and efficiently. It has numerous ready-made trained models and modular parts that are easy to combine. The main advantage is the simple and transparent model creation process.

MXNet

This highly scalable deep learning framework was developed under the Apache Software Foundation and is used by large companies and global web services, mainly for speech and handwriting recognition, natural language processing (NLP) and forecasting. MXNet supports a range of popular languages, including Python, C++, JavaScript, R and Julia.

DL4J

Deeplearning4j is a commercial-grade open-source platform written mainly for Java and Scala. The framework is a good choice for projects concerning image recognition, natural language processing, vulnerability search and text analysis. DL4J supports various types of neural networks and can handle large amounts of data without losing speed.

SaM Solutions Delivers Personalized Experiences

Our partnership with Coveo, a company that provides AI-powered search and recommendation technologies, gives us the opportunity to develop smart websites, apps and CRM systems for our clients.

SaM Solutions actively uses Coveo for Sitecore in its current Sitecore projects, which results in better search results, higher conversions and greater customer satisfaction.

Summing Up

Artificial intelligence and big data analytics are taking root in our everyday lives, producing significant transformations.

Content search and recommendation practices are becoming more and more human-like with the help of AI algorithms.

Customer satisfaction is enhanced with the improvement of the quality of search results.

If you have a project idea that requires the implementation of smart search and recommendation algorithms, don’t hesitate to contact us to discuss it.
