Verifiable AI: Building Trust Through Transparency, Proof, and Accountability

Key Facts

- Trust is now mandatory. Regulators, customers, and courts expect proof that AI works as intended.

- Verifiable AI adds evidence. It combines explainability, audit trails, data lineage, cryptography, and secure hardware.

- Compliance drives adoption. Laws like the EU AI Act and frameworks like NIST AI RMF require traceability and accountability.

- Security goes beyond accuracy. Cryptographic proofs and secure enclaves protect models from tampering and fraud.

- Transparency builds confidence. When decisions can be inspected and verified, people are more willing to trust AI.

Artificial intelligence (AI) permeates almost every sector — from predicting credit risk to diagnosing disease and generating creative content. Yet its rapid adoption has outpaced mechanisms that prove AI is working as intended. Black-box models can produce errors, replicate hidden biases, and be exploited for fraud, while generative models make it difficult to distinguish authentic media from deepfakes.

Regulators worldwide are responding with new laws and frameworks that demand explainability, accountability, and transparency in artificial intelligence. Organisations can no longer rely on performance alone; they must be prepared to prove that their AI was trained on legitimate data, operates safely, respects privacy, and produces auditable outputs. Verifiable AI supplies this proof.

By layering cryptographic techniques, secure hardware, and robust audit trails on top of traditional AI systems, verifiable AI creates a foundation of trust that can withstand legal scrutiny and public skepticism.

What Is Verifiable AI?

Verifiable AI is a paradigm that combines transparent design with mathematical proof to enable independent verification of AI operations. According to the digital identity foundation Identity.org, verifiable artificial intelligence systems include four core pillars: auditability (recorded history of decisions), explainability (ability to interpret how decisions are made), traceability (tracking data and model lineage), and security (protection against tampering).

Why Verifiable AI Is Essential Now

Several pressures make verifiable artificial intelligence a priority today:

Accountability and reduced liability

As organisations deploy artificial intelligence into mission-critical workflows, failures now carry legal, financial, and reputational consequences. Verifiable AI introduces concrete safeguards that traditional ML pipelines often lack:

- End-to-end traceability of data sources, model versions, and inference outputs

- Immutable audit logs that record every prediction and decision path

- Evidence for legal defence, allowing enterprises to demonstrate how and why an AI system behaved as it did

- Continuous model integrity checks, reducing risks from stale data, broken pipelines, or unvalidated updates

Instead of opaque outcomes, verifiable systems expose decision logic, turning compliance reviews into constructive diagnostics rather than post-incident investigations.

Mitigating bias and discrimination

Bias remains one of the most persistent risks in artificial intelligence, particularly in high-impact domains such as hiring, lending, and healthcare. Verifiable AI enables organisations to operationalise fairness principles rather than treating them as abstract goals:

- Complete data lineage tracking, showing exactly when and where biased data entered the pipeline

- Model versioning and reproducibility, enabling auditors to compare behaviour across iterations

- Ongoing monitoring, rather than one-time pre-deployment testing

- Human-override mechanisms, ensuring automated decisions can be reviewed and contested

By making bias detectable and explainable, verifiable AI turns ethical requirements into enforceable engineering practices.
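As a rough illustration of ongoing monitoring, the sketch below computes per-group approval rates from logged decisions and flags a large gap for human review. The group labels, the sample decisions, and the 0.2 alert threshold are all assumptions made for this example; demographic parity is only one of several fairness metrics an organisation might choose.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of positive decisions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}

def demographic_parity_gap(records):
    """Difference between the highest and lowest group selection rate."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())

# Hypothetical logged decisions: (group label, approved?)
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

ALERT_THRESHOLD = 0.2  # assumed policy value, not a regulatory figure
gap = demographic_parity_gap(decisions)
needs_review = gap > ALERT_THRESHOLD  # route to a human reviewer when True
```

Running the same check on every batch of logged decisions, rather than once before launch, is what turns the metric into a monitoring control.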

Meeting global AI regulations

AI regulation is advancing rapidly worldwide, with most frameworks converging on transparency, accountability, and risk-based controls. Verifiable AI provides a common technical foundation to meet these requirements across jurisdictions:

- Decision logging and traceability for high-risk systems

- Technical documentation generated directly from the model and data metadata

- Proof of human oversight, auditability, and system robustness

- Disclosure mechanisms for AI-generated content and automated interactions

Rather than building custom compliance for each region, verifiable artificial intelligence allows organisations to build once and comply globally.

Security and fraud prevention

AI has become both a defensive tool and an attack surface. Deepfakes, model tampering, and data poisoning have increased dramatically, demanding stronger verification mechanisms:

- Cryptographic provenance to track content creation, modification, and ownership

- Immutable inference records, preventing silent manipulation of AI outputs

- Explainability combined with privacy, enabling regulatory inspection without data exposure

- Secure execution environments, isolating AI workloads from unauthorised access

These controls make AI systems resilient not just to errors, but to deliberate abuse.

Building public trust

Without transparency, even accurate AI systems struggle with adoption. Verifiable AI strengthens confidence by making systems inspectable, contestable, and accountable:

- Clear visibility into data sources and model behaviour

- Validation workflows that allow professionals to confirm AI outputs before action

- Public-facing audit summaries, improving institutional credibility

- Appeal and review mechanisms, reinforcing human agency

In high-stakes environments — from healthcare to public services — verifiability transforms AI from a black box into a trusted collaborator.

Core Components of Verifiable AI Systems

Let’s explore the core components of verifiable AI systems:

Explainability makes artificial intelligence decisions understandable by linking outputs to the factors that influenced them. In verifiable AI, explanations are cryptographically bound to the exact production model, ensuring auditors analyse the real system, not a theoretical one.

Audit trails provide an immutable record of how data, models, and decisions evolve. They enable reconstruction of artificial intelligence behaviour, support regulatory oversight, and prevent undetected tampering.
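One common way to make a log tamper-evident is to chain entries by hash, so that changing any earlier record invalidates every later one. The following is a minimal sketch of that idea using only the Python standard library; the field names and sample entries are hypothetical, and a production system would also need durable storage and external anchoring of the latest hash.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry

def _digest(body, prev_hash):
    """Hash the entry body together with the previous entry's hash."""
    payload = json.dumps(body, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_entry(log, body):
    """Append a new entry that commits to everything before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"body": body, "prev": prev_hash, "hash": _digest(body, prev_hash)})

def verify_log(log):
    """Recompute the chain and report whether every link is intact."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["body"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True

# Hypothetical inference records
log = []
append_entry(log, {"model": "credit-v3", "input_id": "req-1", "output": "approve"})
append_entry(log, {"model": "credit-v3", "input_id": "req-2", "output": "deny"})
```

An auditor who holds only the final hash can detect any later rewrite of the history by calling `verify_log` and comparing the last entry's hash.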

Traceability connects data origins, model versions, and individual decisions into a continuous lineage. This visibility reduces bias, supports privacy compliance, and allows independent verification without exposing sensitive data.

Cryptographic techniques such as zero-knowledge proofs, together with hardware-based trusted execution environments, prove that AI computations are genuine and untampered. They enable verification of results while keeping data and models confidential.

Reproducibility ensures that identical inputs and conditions yield identical outputs. It is essential for continuous verification, auditing, and long-term compliance of AI systems.
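A simple way to check reproducibility is to fix every source of randomness, rerun the computation, and compare fingerprints of the results. The sketch below uses a toy scoring function as a stand-in for a real model; the function names and the seed are assumptions for illustration only.

```python
import hashlib
import json
import random

def run_inference(seed, inputs):
    """Toy stand-in for a model: weights are derived from a seeded local RNG."""
    rng = random.Random(seed)  # local generator, so global state cannot interfere
    weights = [rng.uniform(-1, 1) for _ in inputs]
    score = sum(w * x for w, x in zip(weights, inputs))
    return {"score": round(score, 12)}

def fingerprint(result):
    """Stable hash of a result, suitable for storing in an audit record."""
    encoded = json.dumps(result, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()

inputs = [0.5, 1.5, -2.0]
first_run = fingerprint(run_inference(42, inputs))
second_run = fingerprint(run_inference(42, inputs))
is_reproducible = first_run == second_run
```

Real models add further sources of nondeterminism (hardware, library versions, parallel reductions), so a fingerprint match in practice also depends on pinning the execution environment.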

The Verifiability Technology Stack

The sections below outline the key building blocks that make AI systems verifiable in practice, not just in theory.

Verifiable credentials (VCs) are tamper‑evident digital statements issued by a trusted authority. The GS1 technical landscape report defines VCs as cryptographically secure attestations that represent identity documents, qualifications, or other claims. They are portable, privacy-preserving, and can embed standards such as certifications or legal requirements.

VCs are built on open standards so they can interoperate across systems; they are typically signed using cryptographic keys and can include metadata describing the issuer, subject, and validity period. VCs simplify regulatory compliance by allowing AI agents to prove that data or models come from authorised sources.
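To make the structure concrete, the sketch below builds a credential with field names taken from the W3C Verifiable Credentials Data Model 2.0 and checks its integrity and validity period. Real VCs are signed with public-key cryptography; here an HMAC with a shared demo key stands in for the issuer's signature purely so the example runs on the standard library. The DIDs, the key, and the `ModelApproval` type are hypothetical.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone

ISSUER_KEY = b"demo-issuer-key"  # assumption: stand-in for the issuer's private key

credential = {
    "@context": ["https://www.w3.org/ns/credentials/v2"],
    "type": ["VerifiableCredential", "ModelApproval"],  # second type is hypothetical
    "issuer": "did:example:regulator",
    "validFrom": "2025-01-01T00:00:00+00:00",
    "validUntil": "2026-01-01T00:00:00+00:00",
    "credentialSubject": {"id": "did:example:model-123", "approvedUse": "credit-scoring"},
}

def issue_tag(cred):
    """Stand-in for signing: HMAC over a canonical JSON encoding."""
    encoded = json.dumps(cred, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(ISSUER_KEY, encoded, hashlib.sha256).hexdigest()

def verify_credential(cred, tag, now):
    """Check that the credential is unmodified and currently valid."""
    if not hmac.compare_digest(tag, issue_tag(cred)):
        return False
    start = datetime.fromisoformat(cred["validFrom"])
    end = datetime.fromisoformat(cred["validUntil"])
    return start <= now <= end

tag = issue_tag(credential)
valid_now = verify_credential(credential, tag, datetime(2025, 6, 1, tzinfo=timezone.utc))
```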

Zero-knowledge proofs (ZKPs) allow one party to prove the validity of a statement to another without revealing any additional information. In artificial intelligence, zero-knowledge machine learning (ZKML) can prove that a particular model produced a specific output from a particular input without disclosing the input or the model’s parameters. Verification is cheap: a proof can be checked in milliseconds, while the heavy work of generating the proof happens separately, for example off-chain or on secure servers.

Although generating proofs has historically incurred massive overheads (hundreds of thousands of times slower than native inference), recent advances have reduced the cost to within several orders of magnitude and continue to improve. ZKPs can be combined with TEEs for hybrid verifiability — TEEs safeguard the computation and keys, while ZKPs provide mathematical proof that nothing nefarious occurred.
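The core idea of a zero-knowledge proof can be shown with a far simpler statement than model inference. The sketch below is a toy Schnorr proof, made non-interactive with a hash-based challenge: the prover shows knowledge of a secret exponent `x` behind a public value `y` without revealing `x`. The group parameters are deliberately tiny and insecure, chosen only so the arithmetic is easy to follow; this is not ZKML and not suitable for real use.

```python
import hashlib
import secrets

# Toy group (insecure, for illustration): G has prime order Q modulo P.
P, Q, G = 23, 11, 2

def challenge(y, t):
    """Derive the challenge from the public values (Fiat-Shamir style)."""
    data = f"{G}|{y}|{t}".encode("utf-8")
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q

def prove(x):
    """Prove knowledge of x such that y = G^x mod P, without revealing x."""
    y = pow(G, x, P)
    r = secrets.randbelow(Q)   # fresh random nonce for each proof
    t = pow(G, r, P)           # commitment
    c = challenge(y, t)
    s = (r + c * x) % Q        # response
    return y, (t, s)

def verify(y, proof):
    """Check G^s == t * y^c mod P using only public information."""
    t, s = proof
    c = challenge(y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P

secret_x = 7                   # known only to the prover
public_y, proof = prove(secret_x)
accepted = verify(public_y, proof)
```

The verifier performs two modular exponentiations regardless of how the prover obtained `x`, which mirrors the asymmetry described above: checking a proof is much cheaper than producing the computation it attests to.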

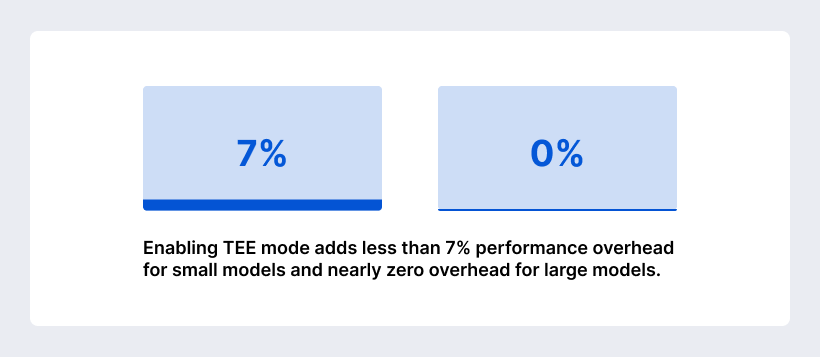

Trusted execution environments (TEEs) create isolated execution environments within a processor. They keep sensitive code and data encrypted and inaccessible to the operating system or hypervisor, and they provide cryptographic attestation to prove that a computation ran in a genuine, untampered environment. TEEs such as Intel SGX, AMD SEV, and Nvidia confidential GPUs support artificial intelligence training and inference. Benchmarks show that enabling TEE mode adds less than 7% performance overhead for small models and nearly zero overhead for large models because encryption overhead diminishes as computation scales. TEEs protect AI models from theft or tampering and provide evidence that computations were executed as claimed.

The Content Authenticity Initiative (CAI) and the Coalition for Content Provenance and Authenticity (C2PA) design open standards for establishing the origin and edit history of digital content. Splunk notes that C2PA provides an industry-wide standard and that digital provenance is critical for combating deepfakes and misinformation.

Infosys explains that C2PA emerged after research concluded that watermarking and steganography alone were insufficient; C2PA attaches manifests (signed records containing assertions, claims, and signatures) to media assets, enabling audiences to review modification history and confirm authenticity. CAI advocates for the adoption of these standards and develops open‑source tools in multiple languages. By integrating content credentials into media generation and distribution pipelines, AI developers can signal whether content was AI‑generated and ensure tamper‑evident editing, building trust in digital media.
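The manifest idea can be sketched as a signed record that commits to a hash of the asset. The example below is a simplified illustration, not the actual C2PA format or any C2PA library API: the field names are hypothetical, and an HMAC with a demo key stands in for the certificate-based signature a real implementation would use.

```python
import hashlib
import hmac
import json

SIGNING_KEY = b"demo-signer-key"  # assumption: stand-in for a signing certificate

def sha256_hex(data):
    return hashlib.sha256(data).hexdigest()

def _sign(claim):
    encoded = json.dumps(claim, sort_keys=True).encode("utf-8")
    return hmac.new(SIGNING_KEY, encoded, hashlib.sha256).hexdigest()

def make_manifest(asset, assertions):
    """Bind a list of assertions to the exact bytes of the asset."""
    claim = {"asset_sha256": sha256_hex(asset), "assertions": assertions}
    return {"claim": claim, "signature": _sign(claim)}

def check_manifest(asset, manifest):
    """Accept only if the claim is unmodified and matches the asset bytes."""
    claim = manifest["claim"]
    if not hmac.compare_digest(_sign(claim), manifest["signature"]):
        return False
    return claim["asset_sha256"] == sha256_hex(asset)

image_bytes = b"\x89PNG...demo image bytes"  # placeholder content
manifest = make_manifest(
    image_bytes,
    [{"label": "generated_by_ai", "value": True},
     {"label": "edit", "value": "cropped"}],
)
is_authentic = check_manifest(image_bytes, manifest)
```

Any change to the asset bytes or to the assertions after signing causes `check_manifest` to fail, which is the tamper-evidence property the standard is designed to provide.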

Decentralised identifiers (DIDs) are portable, URI-based identifiers that enable self‑sovereign identity. The GS1 report describes DIDs as globally unique identifiers that allow individuals, organisations, or devices to have self-sovereign, verifiable identities. The Cheqd foundation elaborates that self‑sovereign identity centres control of information around the user; DIDs remove the need for central databases and give individuals control over what information they share.

DIDs are most often used as trusted identifiers written into verifiable credentials and can be easily ported between repositories. A DID resolves to a DID Document, which lists controllers and public keys associated with the identifier. Combining DIDs with VCs and ZKPs allows AI agents to prove their identity and attest to their models and data without relying on centralised authorities.
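The resolution step described above can be sketched with a DID Document held in memory. The property names (`id`, `controller`, `verificationMethod`, `assertionMethod`) follow the W3C DID Core data model; the `did:example` identifier, the placeholder key value, and the dictionary-based resolver are assumptions for illustration, since real resolution depends on the DID method in use.

```python
DID = "did:example:123456789abcdefghi"

DID_DOCUMENT = {
    "id": DID,
    "controller": DID,
    "verificationMethod": [
        {
            "id": f"{DID}#key-1",
            "type": "Ed25519VerificationKey2020",
            "controller": DID,
            "publicKeyMultibase": "z-placeholder-not-a-real-key",  # hypothetical value
        }
    ],
    # Keys authorised for issuing claims such as verifiable credentials
    "assertionMethod": [f"{DID}#key-1"],
}

REGISTRY = {DID: DID_DOCUMENT}  # hypothetical stand-in for a DID resolver

def resolve(did):
    """Return the DID Document for a DID, or None if it is unknown."""
    return REGISTRY.get(did)

def find_key(did_url, purpose="assertionMethod"):
    """Look up a verification method that is authorised for the given purpose."""
    did, _, _fragment = did_url.partition("#")
    document = resolve(did)
    if document is None or did_url not in document.get(purpose, []):
        return None
    for method in document["verificationMethod"]:
        if method["id"] == did_url:
            return method
    return None

signing_key = find_key(f"{DID}#key-1")
```

A verifier would use the returned public key to check the signature on a credential that names this DID as its issuer.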

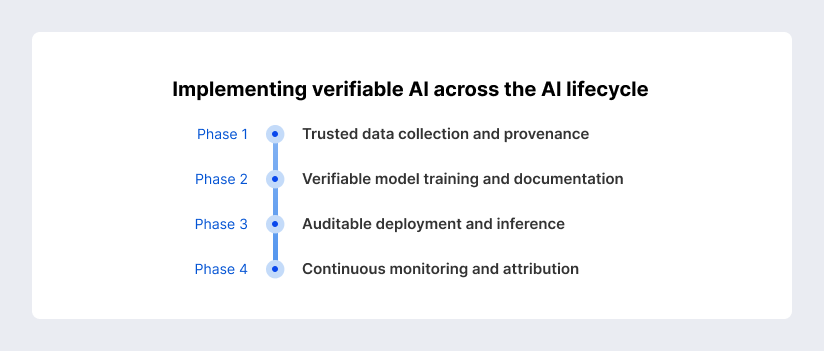

Implementing Verifiable AI Across the AI Lifecycle

Verifiability cannot be bolted onto artificial intelligence at the very end. It must be designed into every stage of the lifecycle — from the moment data is collected to the way models are monitored years after deployment.

Phase 1: Trusted data collection and provenance

AI systems inherit both value and risk from their data. Verifiable AI starts by making data origins and usage transparent and auditable:

- Data origin tracking, capturing where data comes from, how it was collected, and under what conditions

- Consent and permission logs, allowing individuals to see when their data is used and revoke access

- Usage and access metadata, documenting who accessed data, for what purpose, and for how long

- Embedded metadata and digital signatures at ingestion, ensuring later proof of authenticity and integrity

By building provenance directly into data pipelines, organisations establish a reliable foundation for downstream verification.

Phase 2: Verifiable model training and documentation

Training is where models acquire both intelligence and risk. Verifiability at this stage ensures results are reproducible, explainable, and defensible:

- Comprehensive model lineage, including architectures, hyperparameters, and training configurations

- Dataset versioning, linking each model to the exact data used during training

- Immutable metadata logs, secured with cryptographic identifiers

- Secure training environments, protecting sensitive data while preserving auditability

- Fairness, robustness, and performance evaluations, documented for regulatory alignment

Integrated tooling allows training pipelines to remain transparent without exposing proprietary or sensitive assets.
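The lineage items above can be tied together with a single cryptographic identifier. The sketch below hashes the training data and combines that hash with the hyperparameters and code version into one record ID; the field names, sample data, and version string are hypothetical, and the dataset is passed as bytes only to keep the example self-contained.

```python
import hashlib
import json

def sha256_hex(data):
    return hashlib.sha256(data).hexdigest()

def lineage_record(dataset_bytes, hyperparams, code_version):
    """Build a record whose ID changes if any training input changes."""
    record = {
        "dataset_sha256": sha256_hex(dataset_bytes),
        "hyperparams": hyperparams,
        "code_version": code_version,
    }
    encoded = json.dumps(record, sort_keys=True).encode("utf-8")
    return {**record, "record_id": sha256_hex(encoded)}

# Hypothetical training inputs
dataset = b"age,income,label\n34,52000,1\n51,38000,0\n"
params = {"learning_rate": 0.01, "epochs": 20, "seed": 42}

record = lineage_record(dataset, params, code_version="a1b2c3d")
```

Storing `record_id` alongside the trained model lets an auditor confirm later that a given model was produced from exactly this data and configuration, without the record itself exposing the data.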

Phase 3: Auditable deployment and inference

Once models are live, every decision they make must be verifiable in retrospect. Deployment is where accountability becomes operational:

- Inference-level logging, recording timestamps, inputs, outputs, and model versions

- Append-only or immutable logs, enabling third-party verification without data disclosure

- Protection against tampering, ensuring deployed models cannot be silently replaced or altered

- Execution proofs, confirming that models ran as intended

- Explainability dashboards, allowing domain experts to interpret and validate outcomes

For generative AI, provenance metadata can be embedded directly into outputs to signal authenticity and origin.

Phase 4: Continuous monitoring and attribution

AI systems evolve over time — often unintentionally. Verifiable AI extends beyond deployment to ensure ongoing reliability and accountability:

- Continuous performance monitoring, detecting drift, bias, and degradation

- Automated alerts and corrective records, documenting when and how issues were addressed

- Updated audit trails, preserving a full history of model changes and interventions

- Content lineage tracking, showing how AI-generated outputs are modified downstream

- Clear attribution, identifying teams or systems responsible for decisions and outcomes

This phase ensures AI remains trustworthy not just at launch, but throughout its operational lifespan.
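Drift detection is often implemented by comparing the distribution of a feature or score in production against a training-time baseline. The sketch below uses the population stability index (PSI) as one possible measure; the bin edges, sample values, and the 0.25 alert threshold (a common rule of thumb, treated here as an assumption) are illustrative.

```python
import math

def bin_shares(values, edges, floor=1e-6):
    """Share of values in each bin; the last bin includes its upper edge."""
    counts = [0] * (len(edges) - 1)
    for value in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= value < edges[i + 1] or (is_last and value == edges[-1]):
                counts[i] += 1
                break
    total = sum(counts)
    if total == 0:
        raise ValueError("no values fall inside the bin edges")
    # A small floor avoids log(0) for empty bins.
    return [max(count / total, floor) for count in counts]

def population_stability_index(baseline, current, edges):
    expected = bin_shares(baseline, edges)
    actual = bin_shares(current, edges)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))

EDGES = [0.0, 0.25, 0.5, 0.75, 1.0]
baseline_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
production_scores = [0.8, 0.85, 0.9, 0.95, 0.7, 0.75, 0.9, 0.99, 0.6]

DRIFT_THRESHOLD = 0.25  # assumed alerting threshold
psi = population_stability_index(baseline_scores, production_scores, EDGES)
drift_detected = psi > DRIFT_THRESHOLD
```

Recording each PSI value and any resulting alert in the audit trail gives the corrective records described above a measurable trigger.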

Real-World Applications and Use Cases of Verifiable AI

Verifiable AI is not an abstract compliance concept. It is already shaping how high-stakes AI systems are designed, deployed, and trusted across industries where errors, bias, or fraud carry real consequences. These use cases show how verifiability moves from theory into day-to-day operations — protecting people, organisations, and public institutions.

Healthcare: Verifying diagnostic models and patient data consent

GE Healthcare’s Edison platform provides a concrete example: it includes model traceability and audit logs that allow medical staff to validate AI diagnoses before applying them. TEEs can protect diagnostic models and patient data during inference, while ZK proofs let hospitals show regulators that approved models were used without revealing patient records. Verifiable AI also supports patient autonomy by capturing consent events and allowing patients to revoke consent, consistent with the data-privacy principle of the US Blueprint for an AI Bill of Rights.

Finance: Auditable fraud detection and compliant algorithmic trading

Immutable audit logs of model inputs and outputs, combined with real-time monitoring, can prove that trading algorithms operated on up-to-date and authorised data. TEEs prevent tampering, while ZKPs can prove compliance with risk management rules without exposing trading strategies. Financial institutions like JPMorgan already combine explainability tools (e.g., SHAP) with audit records so regulators can inspect fraud-detection models. Such practices not only satisfy compliance requirements but also reduce liability and protect shareholder value.

Content creation: C2PA and combating deepfakes

Generative AI has democratized content creation but also flooded media channels with deepfakes and misinformation. Infosys notes that the rise of generative AI has led to a tenfold increase in deepfakes across industries, exposing businesses to fraud and reputational damage.

C2PA standards address this problem by attaching manifests to media assets. Each manifest contains assertions, claims, and cryptographic signatures, and can indicate whether an asset was AI-generated and list all modifications. Organisations such as the BBC now use digital signatures linked to provenance information so audiences can confirm the source of media.

Enterprise AI: Validating RAG systems and internal knowledge bases

Retrieval-augmented generation (RAG) systems inject documents into large-language-model prompts to ground answers in factual sources. Morphik’s 2025 guide explains that RAG has become a board-level priority because it enables enterprises to unlock multimodal content repositories; the RAG market reached $1.85 billion in 2024 and is growing at a 49% CAGR.

RAG addresses hallucination and compliance challenges by forcing models to base answers on actual documents. By returning citations — complete with page numbers, section headers, and quotes — RAG systems create an audit trail that meets governance standards.
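The citation trail can be sketched as retrieval that always returns source metadata alongside the matched text. In the example below, simple keyword overlap stands in for the vector search a real RAG system would use, and the corpus, file names, and section titles are invented for illustration.

```python
import re
from dataclasses import dataclass

@dataclass(frozen=True)
class Passage:
    doc: str
    page: int
    section: str
    text: str

# Hypothetical internal knowledge base
CORPUS = [
    Passage("records-policy.pdf", 12, "4.2 Retention", "Audit logs are retained for seven years."),
    Passage("hr-handbook.pdf", 3, "1.1 Leave", "Employees accrue leave monthly."),
    Passage("security-standard.pdf", 7, "2.3 Access", "Access to audit logs requires approval."),
]

def tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, corpus, top_k=2):
    """Rank passages by keyword overlap (a stand-in for semantic search)."""
    query = tokens(question)
    scored = [(len(query & tokens(p.text)), p) for p in corpus]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [passage for _, passage in scored[:top_k]]

def cite(passages):
    """Turn retrieved passages into citation records for the audit trail."""
    return [
        {"doc": p.doc, "page": p.page, "section": p.section, "quote": p.text}
        for p in passages
    ]

citations = cite(retrieve("How long are audit logs retained?", CORPUS))
```

Logging `citations` together with the generated answer lets a reviewer trace each claim back to a specific page and section.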

Public sector: Transparent decision-making and regulatory reporting

Governments face unique pressures to ensure AI systems are fair, transparent, and accountable. The EU AI Act requires public sector agencies to register high-risk AI systems in a public database and provide technical documentation, risk assessment, and logs for audit. The UK’s AI White Paper sets out cross-sector principles covering safety, transparency, fairness, accountability, and contestability. Canada’s proposed Artificial Intelligence and Data Act (AIDA) would mandate risk assessments, transparency, human oversight, and robustness for high-impact systems.

The Global Landscape: Regulations, Standards, and Frameworks

Verifiable AI is being shaped as much by law and policy as by technology. Governments and industry bodies are converging on a common expectation: AI systems must be explainable, auditable, and accountable by design. Understanding this global landscape is essential for organisations building AI that must operate across borders and regulatory regimes.

EU AI Act and regulatory push for high-risk AI

The EU AI Act represents the most comprehensive and prescriptive AI regulation to date. It introduces a risk-based classification system and places stringent obligations on high-risk AI systems:

- Risk categorisation, distinguishing between unacceptable, high, limited, and minimal-risk AI systems

- Strict requirements for high-risk use cases, including medical devices, hiring, credit scoring, critical infrastructure, and law enforcement

- Mandatory logging and traceability, enabling post-hoc audits of AI decisions

- Technical documentation and data quality controls, covering training data, design choices, and performance characteristics

- Human oversight and conformity assessments, required before deployment

- Central registration, with high-risk systems listed in an EU-wide database

For limited-risk systems such as chatbots or deepfake generators, transparency obligations apply, including disclosure of AI interaction and labelling of synthetic content. Together, these requirements make verifiable AI a prerequisite for accessing the EU market.

NIST AI Risk Management Framework and trustworthy AI

In the United States, regulatory influence is shaped less by a single law and more by standards frameworks. The NIST AI Risk Management Framework provides voluntary but highly influential guidance:

- Definition of trustworthy AI, including reliability, safety, security, resilience, accountability, transparency, explainability, privacy protection, and fairness

- Four lifecycle functions:

  - Govern: establish policies, roles, and oversight

  - Map: identify AI systems, contexts, and stakeholders

  - Measure: assess risks, impacts, and performance

  - Manage: prioritise and mitigate identified risks

- Cross-agency adoption, with US regulators referencing NIST guidance in enforcement and compliance activities

Verifiable AI aligns directly with this framework by supplying the artefacts — logs, lineage, metrics, and explanations — required to measure and manage AI risks in practice.

Industry Consortia: C2PA and responsible AI initiatives

Alongside governments, industry-led initiatives are defining practical standards for AI transparency and authenticity, particularly for generative and media-focused systems:

- Content authenticity standards, developed by the Content Authenticity Initiative and the Coalition for Content Provenance and Authenticity

- Cryptographically signed content credentials, allowing users to verify origin, creation method, and modification history

- Real-world adoption, including media organisations such as the BBC, and tooling integrations across major platforms

- Watermarking and provenance technologies, embedded into generative AI products by companies like Adobe and Google

- Broader responsible AI initiatives, including multi-stakeholder collaboration through groups like the Partnership on AI and international guidance such as the OECD AI Principles

These efforts complement regulation by providing interoperable, technically grounded mechanisms for transparency and accountability at scale.

Challenges and Future Outlook

Verifiable AI also comes with costs and open problems. Understanding them helps organisations make informed, realistic decisions about when and how to invest.

Cryptographic techniques and secure hardware introduce overhead. Enabling TEEs on Nvidia confidential GPUs results in less than 7% performance impact for smaller models and near zero overhead for large models. Zero-knowledge proofs, however, remain computationally expensive: early ZKML prototypes incurred overheads of around 1,000,000×, though this has fallen to the order of tens of thousands of times and is expected to drop further.

Businesses worry that disclosing model details or data sources will expose intellectual property or competitive advantages. Zero-knowledge proofs offer a solution: they enable stakeholders to verify that the correct model and data were used without revealing proprietary details.

TEEs protect model weights and data during computation, preventing theft while still producing verifiable logs. In content provenance, implementers must balance transparency with privacy; Splunk notes that digital provenance systems face challenges around user adoption, susceptibility to hacks, sensitive metadata exposure, and scaling. Organisations may adopt selective disclosure techniques to reveal only necessary information.

Verifiable AI is still in its early stages. The GS1 landscape report observes that the self-sovereign identity and verifiable credentials ecosystem lacks a dominant approach and that multiple standards and DID methods compete for adoption. Enterprises must choose among different credential formats, proof mechanisms, and transport protocols, creating interoperability challenges.

Meanwhile, the ZKML field continues to evolve; new hardware accelerators, proof systems, and developer tools are reducing cost and complexity. Widespread adoption will require open standards, accessible tooling, education, and regulatory incentives. As the CIO article emphasizes, making verifiability a non-negotiable criterion for AI procurement and building a culture of skepticism and accountability are critical for mainstream adoption.

What Does SaM Solutions Offer?

SaM Solutions is a global software development company with over 30 years of experience and a proven track record of delivering AI-powered systems across industries. Its AI services emphasise end-to-end support — from strategy and AI consulting through development and integration to maintenance — ensuring that AI solutions align with clients’ architecture, data privacy requirements, and business goals.

Ready to implement AI into your digital strategy? Let SaM Solutions guide your journey.

Conclusion

AI is transforming every facet of business and society, but its benefits will only be realised if stakeholders can trust the underlying systems. Verifiable AI provides the framework to build that trust. By combining explainable models, comprehensive audit trails, data lineage, cryptographic guarantees, and secure hardware, organisations can prove that their AI behaves ethically, lawfully, and reliably.

FAQ

Not necessarily. Verifiable AI is about adding layers of transparency and proof to your existing workflows. You may need to wrap your models in secure hardware (TEEs) or generate cryptographic proofs of inference, but you don’t always need to retrain or replace the model itself.
